Cost Optimization on AWS

Introduction – How to reduce your AWS Cost?

Many enterprises are looking to migrate their workloads from on-premises to the public cloud to reduce infrastructure cost. The public cloud provides managed services that offload the customer's operational burden and so reduce operational cost as well. Running your workload in the cloud is easy, but it can become expensive if you do not pay attention to controlling cost in your AWS environments. Always look for ways to reduce the cost of the AWS resources running your workload. AWS provides several tools to review and track the cost of your AWS resources, such as Trusted Advisor, Cost Explorer, the AWS Cost and Usage Report, and AWS Budgets.

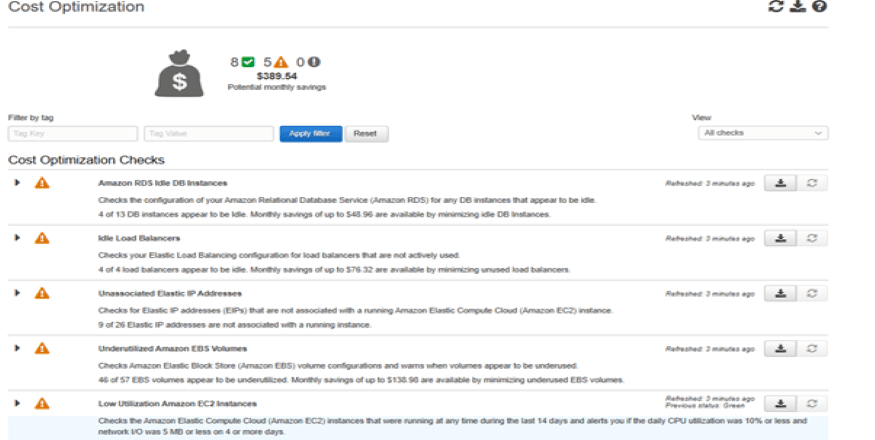

Trusted Advisor is one of the key tools to consider for cost optimization. It can give you an estimate of the potential monthly savings for your AWS resources. Explore your AWS Cost and Usage Report to find out which AWS services you spend the most on, then dive deeper to see usage in every domain, such as compute cost, storage cost, and data transfer cost.
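To make "find out which services you spend the most on" concrete, here is a minimal Python sketch. It assumes you query Cost Explorer through boto3's `get_cost_and_usage` grouped by the `SERVICE` dimension; the function names are my own, and the sketch ignores pagination (`NextPageToken`), so treat it as a starting point rather than a finished tool.

```python
from typing import Dict, List, Tuple


def rank_services_by_cost(response: Dict) -> List[Tuple[str, float]]:
    """Sum UnblendedCost per service across all returned periods, highest first."""
    totals: Dict[str, float] = {}
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[service] = totals.get(service, 0.0) + amount
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def fetch_cost_by_service(start: str, end: str) -> Dict:
    """Query Cost Explorer (needs boto3 and AWS credentials; dates are YYYY-MM-DD)."""
    import boto3  # imported lazily so the ranking logic works without boto3

    client = boto3.client("ce")
    return client.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},
        Granularity="MONTHLY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )
```

Calling `rank_services_by_cost(fetch_cost_by_service("2024-01-01", "2024-04-01"))` would give you a list of `(service, total)` pairs to start the deep dive from.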

Let me take you through some techniques that can help reduce the cost of your AWS resources.

Compute Resources Cost

Trusted Advisor

Choose the right pricing model and the right instance size. EC2 offers different pricing models, such as On-Demand, Reserved Instance, and Spot pricing; choose the one that fits your need. If you need to run a workload for a long time, say a few years, take advantage of Reserved Instances, which are cheaper than On-Demand instances. Once you have chosen a pricing model and instance size, monitor your instances regularly to assess whether they are still well aligned to your workload. Use AWS Compute Optimizer recommendations to upsize or downsize your instances, and Trusted Advisor recommendations on low utilization of EC2 instances. Trusted Advisor lists EC2 instances with low CPU utilization along with the potential savings.
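If you want to run a similar low-utilization check on your own metrics, the idea can be sketched in a few lines of Python. The thresholds below (10% CPU on at least 4 days) are assumptions I picked for illustration, and the input is simply a mapping of instance ID to daily average CPU values that you have already collected, for example from CloudWatch.

```python
from typing import Dict, List


def find_underutilized(
    daily_avg_cpu: Dict[str, List[float]],
    cpu_threshold: float = 10.0,  # assumed threshold, in percent
    min_low_days: int = 4,        # assumed number of "quiet" days
) -> List[str]:
    """Return instance IDs whose daily average CPU was at or below
    the threshold on at least min_low_days days."""
    flagged = []
    for instance_id, samples in daily_avg_cpu.items():
        low_days = sum(1 for cpu in samples if cpu <= cpu_threshold)
        if low_days >= min_low_days:
            flagged.append(instance_id)
    return sorted(flagged)
```

Instances returned by this function are candidates for downsizing or stopping, not automatic targets; check memory and network usage before acting.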

As shown in the screenshot below, look at the recommendations given by Trusted Advisor.

- Reduce the size of EBS volumes that are underutilized.

- Release unassociated Elastic IP addresses.

- Shut down idle load balancers.

- Keep deleting EBS snapshots that are no longer required.

- Remove unnecessary nodes from your Redshift clusters.

Instance Scheduler

Use Instance Scheduler to stop your instances on a regular schedule. If your workload only needs to run on weekdays, for example 8x5 (eight hours a day, five days a week), use Instance Scheduler to start the instances when the workday starts and stop them at the end of the day. AWS provides a ready-made CloudFormation template to provision Instance Scheduler in your AWS environment. You can use this template to provision it quickly, or you can build your own solution with an AWS Lambda function.
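If you build your own scheduler, the core decision is small. The sketch below is my own illustration, not the logic of the AWS Instance Scheduler solution: it assumes a 09:00 to 17:00 weekday window and a `now` value already expressed in your office's local time.

```python
from datetime import datetime, time


def should_be_running(
    now: datetime,
    start: time = time(9, 0),   # assumed start of the workday
    stop: time = time(17, 0),   # assumed end of the workday
) -> bool:
    """True on weekdays between start (inclusive) and stop (exclusive)."""
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    return is_weekday and start <= now.time() < stop


def desired_action(now: datetime, current_state: str) -> str:
    """Map the schedule and the current EC2 state to 'start', 'stop', or 'none'."""
    want_running = should_be_running(now)
    if want_running and current_state == "stopped":
        return "start"
    if not want_running and current_state == "running":
        return "stop"
    return "none"
```

A Lambda function triggered every few minutes could call `desired_action` for each tagged instance and then call the EC2 start or stop API accordingly.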

Savings Plans

Savings Plans are another great way to save on compute cost. Compute Savings Plans offer the most flexibility: they apply to EC2 instances regardless of region, operating system, instance family, or tenancy, and they also apply to AWS Fargate (for both ECS and EKS) and AWS Lambda. They can provide savings of around 60% compared with On-Demand pricing. AWS Cost Explorer can guide your purchase with its Savings Plans recommendations.
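Before committing, it helps to see how a commitment interacts with your actual usage. The following is a simplified model of my own, assuming a single flat discount rate: the hourly commitment is always paid, it covers `commitment / (1 - discount)` worth of On-Demand usage, and anything above that is billed at On-Demand rates. Real Savings Plans rates vary per instance type and region.

```python
def hourly_cost_with_savings_plan(
    on_demand_usage: float,  # usage for the hour, priced at On-Demand rates (USD)
    commitment: float,       # Savings Plan commitment (USD per hour)
    discount: float,         # assumed flat discount, e.g. 0.5 for 50%
) -> float:
    """Hourly bill under a simplified single-discount Savings Plan model."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    if on_demand_usage < 0 or commitment < 0:
        raise ValueError("usage and commitment must be non-negative")
    covered_on_demand = commitment / (1.0 - discount)
    overage = max(0.0, on_demand_usage - covered_on_demand)
    return commitment + overage
```

With a 50% discount and a $4 commitment, $10 of usage costs $6 instead of $10, but $5 of usage still costs $4, which is why over-committing erodes the saving.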

Tag your AWS resources

Tagging is also an important aspect. It helps in organizing and managing your resources. Set up automatic tagging in your AWS environment based on cost center, so that any resource is tagged automatically when it is created. CloudWatch Events and a Lambda function are one way to implement this. For example, AWS services publish events to CloudWatch Events, and EC2 publishes an instance state-change notification for states such as pending, running, stopping, stopped, and terminated. Create a CloudWatch Events rule that matches a change in EC2 instance state, such as an instance starting, and add a Lambda function as the target to tag that instance when it starts. There are many similar ways to automate tagging and organize your resources for cost control.
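A minimal sketch of such a Lambda function could look like this. The default tag value is a placeholder of mine, and a real implementation would look up the proper cost center (for example from the account or the user who launched the instance) instead of a constant. The handler uses boto3's EC2 `create_tags` call.

```python
from typing import Dict, List, Optional

# Hypothetical default; replace with a lookup of the real cost center.
DEFAULT_TAGS = {"CostCenter": "unassigned"}


def build_tags(tags: Dict[str, str]) -> List[Dict[str, str]]:
    """Convert a plain dict into the Key/Value list the EC2 API expects."""
    return [{"Key": key, "Value": value} for key, value in sorted(tags.items())]


def instance_to_tag(event: Dict) -> Optional[str]:
    """Return the instance ID if the event is a transition to 'running', else None."""
    if event.get("detail-type") != "EC2 Instance State-change Notification":
        return None
    detail = event.get("detail", {})
    if detail.get("state") != "running":
        return None
    return detail.get("instance-id")


def lambda_handler(event, context):
    """Tag the instance named in an EC2 state-change event when it starts running."""
    instance_id = instance_to_tag(event)
    if instance_id is None:
        return {"tagged": None}
    import boto3  # available in the Lambda runtime; imported lazily here

    ec2 = boto3.client("ec2")
    ec2.create_tags(Resources=[instance_id], Tags=build_tags(DEFAULT_TAGS))
    return {"tagged": instance_id}
```

Note that `create_tags` overwrites an existing tag with the same key, so you may want to check the current tags first if teams set `CostCenter` themselves.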

Use AWS Organizations

If you have multiple accounts, use AWS Organizations with all features enabled. All features include consolidated billing, which can provide savings on Reserved Instances for EC2, RDS, and Redshift and on S3 data storage. Consolidated billing rolls up EC2 instance and storage usage across all accounts, which can give you some cost savings on compute and storage. Use service control policies (SCPs) to limit what member accounts can do. For example, apply an SCP that prevents an account's IAM users from launching large or extra-large EC2 instances.
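As an illustration of the SCP example, the Python sketch below builds a policy document that denies `ec2:RunInstances` for any instance type outside an allowed list, using the `ec2:InstanceType` condition key. The allowed types and the `Sid` are my own choices; adapt them to what your teams actually need.

```python
import json
from typing import Dict, Iterable


def build_instance_type_scp(allowed_types: Iterable[str]) -> Dict:
    """Build an SCP that denies launching instance types outside allowed_types."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnapprovedInstanceTypes",
                "Effect": "Deny",
                "Action": "ec2:RunInstances",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {
                    "StringNotEquals": {"ec2:InstanceType": sorted(allowed_types)}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Example: only small burstable instances may be launched.
    print(json.dumps(build_instance_type_scp(["t3.micro", "t3.small"]), indent=2))
```

The printed JSON can be pasted into the AWS Organizations console as a policy and attached to an account or organizational unit.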

Use Cloud Native/Serverless Services

Use serverless compute services where possible: they are managed services and you pay only for what you use. Review your architecture regularly for opportunities to replace EC2 instances with AWS Fargate or AWS Lambda functions. Serverless services can provide significant savings. Customers often lift and shift their applications onto EC2, but to reduce cost and improve performance they should always consider cloud-native services such as API Gateway, Lambda, DynamoDB, SQS, SNS, and Aurora Serverless.

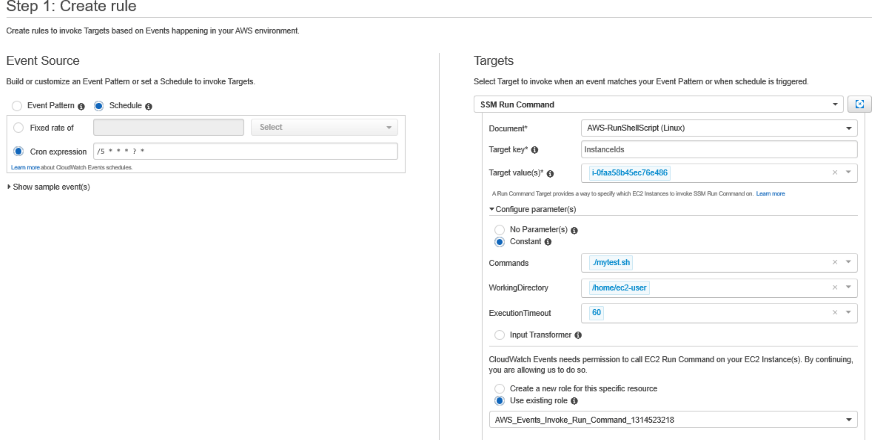

Let me give you an example that saved around $1,000 per year. One customer was using a COTS product to schedule jobs on an on-premises server. During the migration they planned to run the same scheduler on EC2 and were looking for new licenses. I suggested using an AWS CloudWatch Events rule to schedule the jobs instead. CloudWatch Events rules provide a cron-based scheduler that can trigger any supported AWS service as a target. The existing scheduler ran shell-script jobs at set times on a Linux server, so I advised the customer to schedule these scripts with a CloudWatch Events rule that uses AWS Systems Manager Run Command as its target. CloudWatch Events rules cost very little: you pay only a minimal amount, on the order of $1, for event invocations. The customer was happy that the solution saved money and also removed the need to coordinate with the vendor for new licenses or for support during installation and configuration of the scheduler on the server. The key is to look for opportunities to eliminate licensed software. There are many ways to adopt AWS cloud-native services.

The screenshot below shows a scheduled shell script being executed through Run Command on an EC2 instance.
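For readers who prefer code to the console, here is a rough Python sketch of the same setup using boto3's `put_rule` and `put_targets`. The rule name, target ID, role ARN, instance IDs, and script path are all placeholders of mine, and the IAM role must allow CloudWatch Events to call Systems Manager. Schedule expressions are evaluated in UTC.

```python
import json
from typing import Dict, List


def build_schedule(minute: int, hour: int) -> str:
    """Daily cron expression: cron(Minutes Hours Day-of-month Month Day-of-week Year)."""
    if not (0 <= minute <= 59 and 0 <= hour <= 23):
        raise ValueError("minute must be 0-59 and hour 0-23")
    return f"cron({minute} {hour} * * ? *)"


def build_run_command_target(
    region: str, instance_ids: List[str], commands: List[str], role_arn: str
) -> Dict:
    """Target that runs shell commands through the AWS-RunShellScript document."""
    return {
        "Id": "run-shell-script",  # hypothetical target ID
        "Arn": f"arn:aws:ssm:{region}::document/AWS-RunShellScript",
        "RoleArn": role_arn,
        "Input": json.dumps({"commands": commands}),
        "RunCommandParameters": {
            "RunCommandTargets": [{"Key": "InstanceIds", "Values": instance_ids}]
        },
    }


def create_scheduled_job(rule_name: str, schedule: str, target: Dict) -> None:
    """Create the rule and attach the target (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builders above work without boto3

    events = boto3.client("events")
    events.put_rule(Name=rule_name, ScheduleExpression=schedule, State="ENABLED")
    events.put_targets(Rule=rule_name, Targets=[target])
```

For example, `build_schedule(30, 2)` gives a rule that fires every day at 02:30 UTC, and `commands` could be something like `["/opt/jobs/nightly.sh"]` (a hypothetical script path).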

Upgrade Instances To New Generation

Upgrade your instances to a newer-generation instance type. Newer generations provide better performance and lower cost than older ones. One of our clients had been running hundreds of EC2 instances on older-generation types such as m4 and t2. When we suggested moving to m5 and t3, they started getting better performance at lower cost. AWS keeps releasing new instance generations, so always look for opportunities to upgrade.

Evaluate Data Transfer Cost

Below are the data transfer charges you need to consider.

Data transfer from an AWS service to the Internet is charged, while data transfer from the Internet into an AWS service is free.

Data transfer across regions is charged, and data transfer between EC2 instances across availability zones is charged.

Data transfer between EC2 instances in the same availability zone using private IP addresses is free, as is data transfer between EC2 and S3 within the same region. Use private IP addresses for communication between your EC2 instances and with other services.

Data transfer between EC2 and the services below is free within the same region.

- S3

- Glacier

- DynamoDB

- SimpleDB

- SQS

- SES

Data transfer between EC2 and the services below is charged when it crosses availability zones.

- RDS

- Redshift

- ELB

- ENI

So design your AWS architecture to minimize inter-region and inter-availability-zone data transfer. For example, transferring data between two EC2 instances in different availability zones incurs data transfer cost. You can use S3 in between to avoid that charge, because data transfer between EC2 and S3 is free within the same region; keep in mind that S3 request and storage charges still apply. Data transfer cost also depends on the region and can vary significantly, so choose your region wisely when planning a deployment. If your workload does not require a specific region, choose the one that provides AWS resources at the lowest cost.
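To compare designs, it can help to put rough numbers on each transfer path. The sketch below is plain arithmetic; the per-GB rates in `EXAMPLE_RATES` are placeholders I made up for illustration and are not real AWS prices, so substitute the rates from the pricing page for your region.

```python
from typing import Dict

# Placeholder per-GB rates in USD. These are NOT real AWS prices.
EXAMPLE_RATES = {
    "same_az_private_ip": 0.0,
    "cross_az": 0.01,
    "cross_region": 0.02,
    "internet_out": 0.09,
}


def monthly_transfer_cost(gb_by_path: Dict[str, float], rates: Dict[str, float]) -> float:
    """Multiply the monthly GB on each path by its per-GB rate and sum the result."""
    unknown = set(gb_by_path) - set(rates)
    if unknown:
        raise KeyError(f"no rate for: {sorted(unknown)}")
    return sum(gb * rates[path] for path, gb in gb_by_path.items())
```

Running it once for the current design and once for a proposed one (for example, moving chatty instances into the same availability zone) shows quickly whether the change is worth the effort.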

Use CloudFront, a content delivery network service, to avoid transferring large files to the Internet many times. Data transfer from any AWS service to CloudFront is free, so CloudFront can save on data transfer cost from both your EC2 instances and your S3 buckets.

Storage Cost

S3 Analytics

Use S3 Analytics (Storage Class Analysis) to analyze the access patterns of your data. It helps you identify which objects are accessed frequently and which infrequently. Once you have identified the infrequently accessed objects, you can use an S3 lifecycle policy to automate their transition from S3 Standard to a lower-cost storage class such as S3 Standard-IA. You can enable storage class analysis for an entire bucket, a shared prefix, or tags. Alternatively, use the S3 Intelligent-Tiering storage class, which automatically moves objects between frequent and infrequent access tiers as their access pattern changes. Either approach should help you reduce your S3 storage cost.
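A lifecycle rule of this kind can be sketched in Python as follows. The rule ID and the 30-day default are my own choices (S3 does not transition objects to Standard-IA before they are 30 days old), and the configuration is applied with boto3's `put_bucket_lifecycle_configuration`.

```python
from typing import Dict


def build_lifecycle_configuration(prefix: str, days_to_ia: int = 30) -> Dict:
    """Lifecycle configuration that moves objects under prefix to Standard-IA."""
    if days_to_ia < 30:
        raise ValueError("objects must be at least 30 days old before moving to Standard-IA")
    return {
        "Rules": [
            {
                "ID": "transition-to-standard-ia",  # hypothetical rule name
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": days_to_ia, "StorageClass": "STANDARD_IA"}],
            }
        ]
    }


def apply_lifecycle(bucket: str, configuration: Dict) -> None:
    """Apply the configuration (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder works without boto3

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=configuration
    )
```

Be careful: this call replaces the bucket's whole lifecycle configuration, so include any existing rules you want to keep.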

Logs Management Policy

AWS services configured with logging store their logs in either CloudWatch Logs or S3, for example Elastic Load Balancing logs, CloudFront logs, Route 53 logs, CloudTrail logs, and VPC Flow Logs. Review the log files stored in your S3 buckets regularly: move them to low-cost Glacier storage if they may be needed later for compliance, or delete them if they are not needed at all. For example, if you have an application on EC2 instances behind an Elastic Load Balancer with access logs enabled and you deploy a new version of the application, the access logs from the old version kept in the S3 bucket may no longer be required and can be deleted to save cost. A proper log management policy can therefore save cost on the logs you store in S3 and CloudWatch Logs.
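On the CloudWatch Logs side, a log management policy usually comes down to setting a retention period per log group. The sketch below uses boto3's `put_retention_policy`. The tuple lists retention values that I understand the API to accept (it may accept others too), so check the current documentation; the helper simply rounds your requirement up to the next listed value.

```python
# Retention periods (in days) believed to be accepted by CloudWatch Logs.
# This list is an assumption; verify it against the current API documentation.
KNOWN_RETENTION_DAYS = (
    1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365, 400, 545, 731, 1827, 3653,
)


def pick_retention_days(required_days: int) -> int:
    """Smallest listed retention period that still covers required_days."""
    if required_days < 1:
        raise ValueError("required_days must be at least 1")
    for days in KNOWN_RETENTION_DAYS:
        if days >= required_days:
            return days
    raise ValueError("requirement exceeds the longest listed retention period")


def set_retention(log_group_name: str, required_days: int) -> None:
    """Apply the retention policy (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so pick_retention_days works without boto3

    boto3.client("logs").put_retention_policy(
        logGroupName=log_group_name,
        retentionInDays=pick_retention_days(required_days),
    )
```

A log group without a retention policy keeps its logs indefinitely, so even a generous value here stops storage cost from growing without bound.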

RDS snapshot

Keep checking your database backup strategy to manage automated backups and manual snapshots properly. If you already take manual snapshots of your database at regular intervals to meet your RTO and RPO for disaster recovery, including an availability zone failure, you can disable automated backups to save backup storage cost. Be aware that disabling automated backups also disables point-in-time recovery.

DynamoDB table

Check your DynamoDB table's read and write capacity units. Are they being consumed?

Monitor the consumed and provisioned read and write capacity metrics in CloudWatch. If the table is underutilized, reduce the provisioned read and write capacity units, or use auto scaling with provisioned throughput. Auto scaling automatically increases or decreases your read and write capacity, which can significantly reduce the cost of unused provisioned capacity. If the workload is unpredictable, on-demand capacity may be a better option than provisioned capacity: with on-demand you pay for the reads and writes you actually perform.
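One detail that is easy to miss when comparing these metrics: CloudWatch reports consumed capacity as a sum over the period, while provisioned capacity is a per-second figure. The sketch below shows the conversion; the 30% and 70% bands are arbitrary thresholds I chose for illustration.

```python
def utilization(consumed_sum: float, period_seconds: int, provisioned_units: float) -> float:
    """Fraction of provisioned capacity used on average during the period.

    consumed_sum is the Sum statistic of ConsumedReadCapacityUnits or
    ConsumedWriteCapacityUnits over period_seconds.
    """
    if period_seconds <= 0 or provisioned_units <= 0:
        raise ValueError("period_seconds and provisioned_units must be positive")
    average_per_second = consumed_sum / period_seconds
    return average_per_second / provisioned_units


def recommend(util: float, low: float = 0.3, high: float = 0.7) -> str:
    """Suggest 'decrease', 'increase', or 'keep' for the provisioned capacity."""
    if util < low:
        return "decrease"
    if util > high:
        return "increase"
    return "keep"
```

For example, 3,000 consumed read units over a 300-second period against 100 provisioned units is an average of 10 units per second, or 10% utilization, which `recommend` would flag as a candidate for a decrease.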

Others

Give least privilege, for example per region or per service. Another way to reduce cost is to grant only the permissions that are needed, so that IAM users cannot create resources they should not. Apply resource-level permissions in the IAM policies you assign to users, groups, and roles, and use custom policies for IAM entities as much as you can.
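As one possible shape for a region-wise restriction, the Python sketch below builds a custom policy that denies the listed actions outside approved regions, using the `aws:RequestedRegion` global condition key. The default actions and the `Sid` are my own assumptions; I deliberately scope the deny to a few regional services, because a blanket deny on all actions can also block global services.

```python
from typing import Dict, Iterable, Sequence


def build_region_restriction(
    allowed_regions: Iterable[str],
    actions: Sequence[str] = ("ec2:*", "rds:*"),  # assumed set of regional services
) -> Dict:
    """Policy that denies the given actions outside allowed_regions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRegions",
                "Effect": "Deny",
                "Action": list(actions),
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {"aws:RequestedRegion": sorted(allowed_regions)}
                },
            }
        ],
    }
```

The resulting document can be attached as a custom IAM policy to users, groups, or roles.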

Keep yourself up to date with new AWS service releases and new features added to existing services. AWS constantly enhances its existing services and launches new ones at regular intervals for different use cases, so you can change your current architecture for better performance and cost savings.

Final thoughts on AWS Cost Optimization

AWS cost optimization is an ongoing process. Your AWS resources need to be monitored continuously to identify when they are underutilized (or not utilized at all) and when there are opportunities to reduce cost by deleting, terminating, or releasing zombie resources. Consider the best practices AWS suggests for each service. The key is to act on the recommendations of Trusted Advisor and Cost Explorer, and to review your workload so that it aligns with the AWS Well-Architected Framework.

I hope you liked this article. Go and start monitoring the cost of your AWS resources.

Happy learning!!!